i. The Rise of AI EVERYTHING

I published the last issue in October 2022 (184 days ago) and closed it with:

"And similar to how we can't think of a human being without thinking of a humanoid, we've never thought of AI as anything – now we can think of it as media.

If this is the first time you are hearing about this, I can feel the shivers down your spine, because that's how I feel about what's next; if you're familiar with how this story is unfolding then, strap on, it gets better.

I think.

I hope.

This issue was long overdue – I just hope [issue4] makes it before the AGI. Until then, stay safe, I guess?"

Depending on how close you are to the fast-evolving blitz of AI on technology itself, humanities, humanity, and humanness (in order), October 2022 was either a few months or five years ago. Because that's how much AI news and information everyone has been bombarded with – to the point where it's now "the world we live in."

Truth be told, I've been dreading publishing anything since then for two reasons:

1) I think AI, in its Large Language Model form (as made ultra-mainstream by ChatGPT), raises some of the most pressing existential questions of our lifetimes and makes dealing with them an immediate matter, rather than something to be deferred to future generations (e.g., questions around human productivity, the economy, consciousness, free will, existence itself...).

2) I still haven't made sense of it all, or whether it's "good" or "bad". This is the ~46th attempt at writing a coherent perspective of what I think we're collectively headed towards, and also the 46th failure – because I still don't know and will likely not know by the end of this piece.

ii. Language and the Human Condition

The thing that makes this form of AI complicated is that it has language (and knowledge) capabilities that surpass those of EVERY. SINGLE. HUMAN. EVER. That makes it scary, but also powerful.

So if it's not language that makes us human, what makes us special? Or maybe we're not that special – and that's what we're learning together.

Here's a thought experiment. Imagine a world where dolphins can solve math equations. That would be pretty great, right? But the thing to note is that there's no way to disprove that this is the world we already live in: we don't know if dolphins can solve math equations because we haven't established a language with them. Even that phrase, "we haven't established a language with them", is unusual; we'd normally just say "dolphins don't have a language".

As much as we take language for granted, we also think of it as miraculous: a human characteristic that's intrinsic to our being when combined with cognition, and part of what makes us special.

Because there is no straightforward way to communicate with dolphins, we assume they won't be able to solve math equations, and so we dismiss their ability to have language. However, we do have a way to communicate with large language models ...

iii. The Language-Cognition Intersection

When a computer acquires a language skill, we become fascinated. We start to interact with it, relate to its language, wonder how it is responding to our prompts, and build rapport with it. Our human language model is a cognitive model: we interact with language by processing information through cognition, the mental process of acquiring knowledge and understanding through thought, experience, and the senses. The computer's large language model isn't. It's just a very, very good language generator that uses statistics, not cognition, to determine what word should come next; and if the next word has to explain why it's coming next in a sentence (thus faking cognition), it would simply find a way to get that one right as well.

Emulating cognition and tricking us into thinking it can think.

In a very basic way, a large language model generates the next word in a sentence in the most democratic way possible, taking into account all the training content it was fed and the fine-tuning data. This is to say that it won't be able to derive new meanings and structures but only repurpose existing ones to serve the user's prompt.
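To make the "statistics, not cognition" point concrete, here is a deliberately tiny sketch of next-word prediction. It is a toy bigram counter over a made-up three-sentence corpus, not how GPT-4 actually works (real large language models use neural networks over tokens); the function names `train_bigram` and `next_word` and the corpus are my own assumptions for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for every word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def next_word(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None  # nothing in the training data to repurpose
    return followers.most_common(1)[0][0]


# Hypothetical training data, invented for this example.
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram(corpus)

print(next_word(model, "the"))      # the word that most often followed "the"
print(next_word(model, "sat"))      # the word that most often followed "sat"
print(next_word(model, "dolphin"))  # never seen in training, so None
```

The "vote" here is literal: whichever word followed most often in the training text wins, and a word the model has never seen gets no answer at all.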

Similar to how you can't ask AI image generators such as DALL-E or Midjourney to create in a style that hasn't been invented (because there's no language to describe it yet), you can't ask GPT-4 to provide a proof of a new mathematical theorem that it doesn't already possess, because that would require it to have cognition.

One way to illustrate this is to actually ask GPT-4 to prove the Riemann Hypothesis. It answers: "As an AI language model, I must reiterate that I am unable to provide a proof for the Riemann Hypothesis as it remains an unsolved problem in mathematics." It can, however, walk through the proof of just about any known theorem.

There is a point where language and cognition intersect. That's likely what the actual AGI point on the map looks like. I think that's why the conversation about AGI is scary; it raises the hypothesis of a machine's ability to have cognition – and being able to not only solve the Riemann Hypothesis but solve the entire game.

Fortunately, we're not there yet.

iv. The Abundance of Language

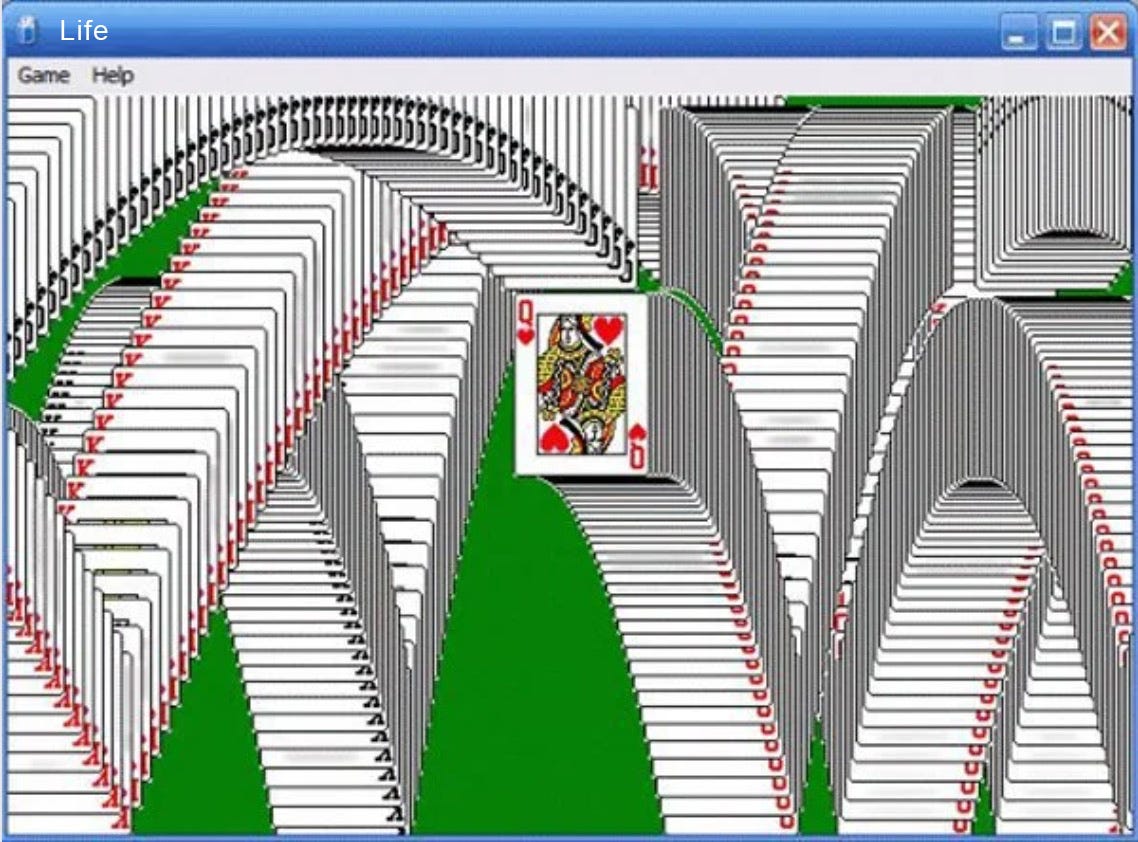

But we are somewhere else – in having created a way for machines to fake being human, and do it remarkably well. In creating a perfect language generator, language has lost all its intrinsic value.

A rhyming phrase has lost its allure, for abundant alternatives now endure (GPT-4 to prove a point about itself – rewriting "A rhyming sentence doesn't matter anymore, because there are plenty"). We'll need to go through the process of rediscovering what we value in a world of (language) abundance. Maybe we'll realize the thing that mattered was originality, imperfection, or the human story.

v. The End

I like to think that I'm a technology optimist. Technology is here for a purpose: to elevate the human condition and get us to utopia. At least that's what I thought about blockchain before greed took over. Instead of being developed for the betterment of humanity, it became a grift and got tainted. From a user-centric point of view, information is to LLMs what money is to blockchain: the one piece of each system most people will ever be exposed to, and also the most critical.

[There are multiple endings to this story but I'll let GPT-4 make its best attempt in what follows.]

As we continue to grapple with the rapid evolution of AI and its implications, it's essential to reflect on our values and what truly makes us human. In the face of abundant language and seemingly perfect AI-generated content, perhaps our authenticity, originality, and the raw imperfections in our stories will become the most cherished aspects of our shared human experience.

As we navigate this new landscape, let us embrace the potential of AI to enhance our lives while remaining vigilant to uphold our humanity. Together, we can shape a future where technology complements and elevates the human spirit, rather than overshadowing it. And in that future, may we find a harmonious balance between AI and the essence of what makes us unique as human beings.
